Semester 3 - Overview

Intro

Over the last two years of my MA I’ve reached into many different corners of XR technology, and into many practical implementations of it, from mass-marketable AR delivered through the web or through social media filters, to scalable solutions for web-based Pixel Streaming. This is a final summary of the work that ties these projects together.

AR

Since my last update I’ve worked on many smaller XR projects here and there; the ones highlighted below are those with the most substance to them.

Over the past 12 months I’ve been working on a smoking prevention application intended as an AR-aided education platform. New chapters can be added over time to build a rich story environment that educates users not only on the negative side effects of smoking, but also on the positive effects that follow after stopping. The project is intended to be deployed as an app-based AR system that lets the user place accurate digital skeletal models of the human body in realtime. Users can interact with the models via ‘touchable’ pins, which add an element of gameplay to the experience: touching a pin gives feedback by playing an animation and progressing the game. Because of the device limitations of mobile AR, I’ve had to come up with a few solutions to lighting and material rendering issues, such as using a classic ditheredAA mask around the skeleton to create a ‘pixelated’ effect, which saves a lot of performance cost. Another consideration was the lack of ‘implied’ interactability: users are uncertain of what they can and can’t touch within the scene. Most of the elements that are intended to be touched or moved are highlighted using the same colours, and elements that progress the scene also have audio paired with them. For example, rotating the ‘platter’ the skeleton stands on produces a distinctive ‘clicking’ sound to give the user some satisfying feedback. Below is a short demo video that illustrates how the project currently works. The UI and front-end have progressed further since, but because I’m currently under NDA this is all I can show for now.
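As a rough illustration of the dithered mask idea (not the project’s actual material, which I can’t share), ordered dithering compares an opacity value at each pixel against a repeating threshold pattern, so every pixel is either fully drawn or fully discarded and no blended transparency is needed. The sketch below uses a standard 4x4 Bayer matrix; the function names are hypothetical and a real version would live in a shader rather than Python.

```python
# Standard 4x4 Bayer ordered-dither matrix (values 0..15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def dither_visible(x, y, opacity):
    """Return True if pixel (x, y) is drawn for an opacity in [0, 1]."""
    # Each screen position gets a fixed threshold from the tiled matrix.
    threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
    return opacity > threshold


def dither_mask(width, height, opacity):
    """Build a boolean mask of drawn pixels for a constant opacity."""
    return [
        [dither_visible(x, y, opacity) for x in range(width)]
        for y in range(height)
    ]


def coverage(mask):
    """Fraction of pixels in the mask that are drawn."""
    cells = [cell for row in mask for cell in row]
    return sum(cells) / len(cells)
```

Over a full tile the fraction of drawn pixels matches the requested opacity, which is what produces the ‘pixelated’ fade while each individual pixel stays opaque.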

As well as ‘app-based’ AR, I’ve been looking into practical implementations of web-based AR as a scalable solution on mobile devices. This gives far more flexibility in the number of users that can access the AR, as it removes barriers such as downloading an app; instead it’s embedded right there in the webpage and the user can experience it there and then. My exploration into this has been through developing a ‘simplistic’ AR experience that shows how a medical procedure happens in realtime, using Apple’s USDZ AR viewer format. The workflow is considerably different: we take the 3D models, animate them and export them to glTF, and from there use a simple converter to turn them into USDZ. Through this process I’ve found a few limitations with the USDZ format, such as the single animation track, which slowed down our development process. After creating a single-track animation, we used ‘modelviewer.dev’ to wrap the online experience, as it’s a simple method for embedding online and handles all of the AR functionality. It also detects the user agent, which means it will display the glTF version on Android and the USDZ version on iOS. Below is a video of an early prototype showing how the animation is intended to work once it’s hosted on the dedicated site. As before, I’m under a strict NDA so I can only show parts of the project.
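The platform switch described above can be sketched in a few lines. This is only an illustration of the idea, not how the viewer component implements it internally; the function name and the file names in the usage note are hypothetical.

```python
def pick_ar_asset(user_agent, gltf_url, usdz_url):
    """Choose which model file to serve, based on a browser user-agent string.

    Assumption: iOS devices get the USDZ file, everything else gets glTF.
    Newer iPads can report a desktop-style user agent, which this simple
    check would miss.
    """
    ua = user_agent.lower()
    is_ios = any(token in ua for token in ("iphone", "ipad", "ipod"))
    return usdz_url if is_ios else gltf_url
```

For example, `pick_ar_asset(ua, "procedure.glb", "procedure.usdz")` would hand an iPhone the USDZ file and an Android phone the glTF one. In practice the viewer component does this selection itself once it has been given both files.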

In the realm of experimentation, I also took the volumetric ray marching test I made a while back for HoloLens visualisation and turned it into a functional demo that runs in realtime on iOS, using the new M1 iPad Pro. This is an amazing progression, as we can now realistically visualise high-quality MRI or CT scans in front of patients without the need for a specialist PC or cloud rendering equipment. I feel that if this rendering could be adapted and optimised, it could be rolled out on a mass scale, giving far more people access to MRI scans through the iOS platform. Here’s a video from the demonstration.
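For anyone unfamiliar with volumetric ray marching, here is a minimal CPU sketch of the core loop, assuming a scan stored as a 3D grid of densities between 0 and 1. It is not the shader used in the demo: the names, the nearest-voxel sampling and the simple exponential absorption model are all assumptions made for illustration.

```python
import math


def march_ray(volume, origin, direction, step=0.5, max_steps=256, absorption=1.0):
    """Accumulate opacity along one ray through volume[z][y][x].

    origin and direction are (x, y, z) tuples in voxel units; direction is
    assumed to be normalised. Returns the final opacity in [0, 1].
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    x, y, z = origin
    dx, dy, dz = direction
    transmittance = 1.0  # fraction of light still passing through

    for _ in range(max_steps):
        ix, iy, iz = math.floor(x), math.floor(y), math.floor(z)
        if 0 <= ix < width and 0 <= iy < height and 0 <= iz < depth:
            density = volume[iz][iy][ix]  # nearest-voxel sample
            transmittance *= math.exp(-density * absorption * step)
            if transmittance < 0.01:
                break  # ray is effectively opaque, stop early
        x += dx * step
        y += dy * step
        z += dz * step

    return 1.0 - transmittance
```

A renderer runs this once per screen pixel, which is why the step count and early exit matter so much on mobile hardware.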

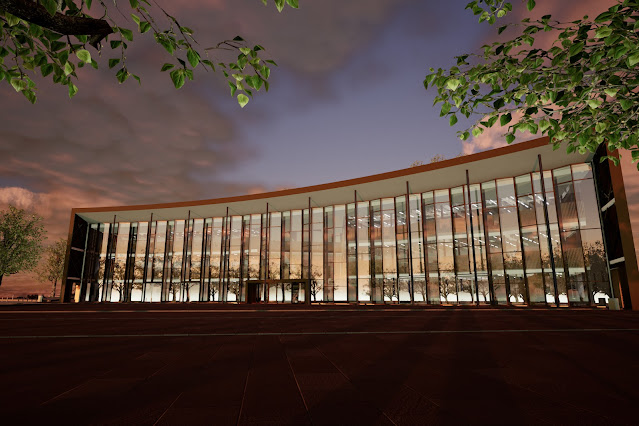

Finally, HoloLens again. The HoloLens has been an amazing tool to develop with over the past year. It has limitations, as it’s very much an enterprise device, but the potential for practical use is amazing. After finding out those limitations, I created a few demos using realtime BIM visualisation. For these I imported the BIM plans for the new student centre, created a realtime ‘scale model’ of the building and placed it around my flat, which allowed me to interact with every element within the scene. I did this mostly out of personal interest and experimentation; I wanted to see the limits of running a realtime plan in MR. Here are a few screenshots from the test.

ML

ML has always been an interesting idea in my eyes, but until now I’ve never had the time, nor the need, to explore its practices and technologies. I quickly delved into surface-level implementations of ML in game engines. This meant I downloaded CUDA, got it running in some Nvidia Docker containers, and then realised this was much more difficult than expected. My exploration into ML was somewhat short-lived, but here’s a quick overview of what I found in terms of how it can aid practical uses in game engines.

For myself specifically, I was able to get my hands on a project where my team and I were tasked with tracking people within a room without using traditional camera systems. This led us to Azure Kinect development, which allowed us to use infrared depth-map scanning to track AR skeletal bodies in realtime and save this information out as animation data.

Similar to how the Xbox 360 used the Kinect to make ‘you the controller’, this system does the same, but without the need to save any sort of sensitive information about the people it’s tracking, meaning it is completely anonymous. This wasn’t the bigger picture for me, however; the project was just a gateway into what could be something bigger: the idea of designing a smart building that could anonymously track people walking around it in realtime, using three of these cameras in each room to capture parametric depth data and accurately deproject the tracked AR skeletal structures into a cloud-hosted digital twin of the same building. In essence, imagine being able to log on to an online web portal and see, in realtime, how many people are in each room, how many are walking down a corridor, how many are loitering outside, where people are walking and what patterns they walk in. All of this information could be saved out and used as training data for a complex AI model, to create the perfect AI for simulating movement around a realistic BIM model in future. This feature would also be completely anonymous: it doesn’t capture any facial or otherwise sensitive data from the people it’s tracking, because all the data is processed at runtime and then disposed of. The intention is that no consent or permission would be needed, as there would be no GDPR violation. In the end, though, it was more of an idea that finished with me teaching an AI model how to walk around a building very poorly, as I only had a dataset of about one.
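The room-counting part of that portal idea is simple to sketch. The example below assumes each tracked skeleton has already been reduced to an anonymous (x, y) floor position in building coordinates, and that each room is an axis-aligned rectangle; the function name, room names and coordinates are hypothetical.

```python
def count_occupancy(rooms, positions):
    """Count how many tracked positions fall inside each room.

    rooms maps a room name to (min_x, min_y, max_x, max_y) in metres.
    positions is a list of (x, y) floor positions with no identity attached.
    Positions outside every room are ignored.
    """
    counts = {name: 0 for name in rooms}
    for px, py in positions:
        for name, (min_x, min_y, max_x, max_y) in rooms.items():
            if min_x <= px < max_x and min_y <= py < max_y:
                counts[name] += 1
                break  # a person is counted in at most one room
    return counts
```

Because only positions are passed in, nothing in this step needs to know who a person is, which matches the anonymous intent of the design.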

My other exploration into ML went side by side with getting the volumetric ray marching working on an iPad. As Apple have released an amazing set of tools called ‘Create ML’, I built a very rudimentary ML model that took different classes of brain tumours and identified the pixels within an image. With a larger dataset, this could be used in tandem with the brain visualisation for realtime scanning, to estimate whether a patient has a tumour and highlight where exactly it might be within the brain. For me, this wasn’t meant as a replacement for clinicians or doctors, but it could be a powerful tool to aid their diagnosis process and speed it up.

Visualisation

Next up, visualisation! I love delving into the architectural and industry-based work I do, as for me it’s a far more creative topic. It allows me to reinterpret real life, and it gives me creative control over the visualisation of something that is real or will be real.

First up, the student centre. I feel as if my skills have developed vastly over the past two years. I can now safely say my Archvis skills were terrible; now they’re not so terrible. I feel this is apparent in the latest visualisation of the new Student Centre we created, from a far more advanced lighting system, to a better method of rendering, to better-suited materials for the final project. Even though this project wasn’t used in the final marketing campaign for the opening of the centre, I have these amazing renders, along with a brilliant lighting demo for the project.

My projects with Maket over the past two years have been amazing opportunities to advance my professional relationship with an actual industry partner, and also to advance my base UE4 skills in a robust situation. From advancing my housing visualiser template, to building a completely new, overhauled system that streamlines the importing and switching process for the user, this has been a great project that has allowed me to build some great systems that host online housing configurators. I’ve attached a few demos below to demonstrate how they work.

Moving on from Maket, I built a specialised configurator for a local architecture company, and was able to create some really complex but useful functionality that made it into the final template: a sun positioner that allows the user to change the time of day in realtime, an ‘Archvis’ mode that hides all material detail and allows the user to see specifically the lighting changes in the scene, and customisable front-end functionality for changing branding, UI colour and loading details. I’ve embedded another video of this project working below.
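A sun positioner of this kind boils down to mapping a time-of-day slider onto a rotation of the scene’s sun light. The sketch below is a deliberately simplified model, assuming sunrise at 06:00 and a sun that sweeps a full circle every 24 hours; it ignores latitude and season, and the function names are hypothetical rather than taken from the configurator.

```python
def sun_rotation_degrees(hour):
    """Map an hour in [0, 24) to a sun rotation angle in degrees.

    0 = sun on the horizon at sunrise (06:00), 90 = directly overhead (12:00),
    180 = on the horizon at sunset (18:00). Values above 180 are below ground.
    """
    return ((hour - 6.0) / 24.0 * 360.0) % 360.0


def is_daytime(hour):
    """True while the sun is above the horizon in this simplified model."""
    return 0.0 < sun_rotation_degrees(hour) < 180.0
```

Driving the light from a single angle like this is what lets the user scrub the time of day in realtime; a more accurate version would compute the angle from a geographic location and date.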

Finally, a brilliant project that came through our door: a proposal for a recreated digital island for VR. In this project we intend to build a narrative-driven gaming experience in VR that will take the player through time and allow them to relive stories from the Japanese island of Hashima. For this demo, another member of my team and I recreated an abstracted version of the island that includes a specific area that will be the initial scene of the game. The demo was built specifically for a funding proposal and will be developed into a larger project. I’ve attached a trailer for the demo, along with a 360 VR version.

Summary

Finally, my AR MA poster. I wanted this poster to be different; I didn’t want it to fit in. For this I used a mass-deployable AR method, using a QR code to send the experience right to the user’s mobile device. All you need to do is scan the QR code below and it will take you to Instagram, where the AR filter it activates can be used to scan the poster and display key models and projects I’ve highlighted over the course of my MA. I feel I’ve come a long way, developed a lot of projects and explored a lot of different areas of ‘Games Design’, from using game practices within XR to create more interactive experiences for business users, to developing on top of gaming technologies to supply cloud-hosted pixel-streaming content to enterprise clients. It’s been fun.
